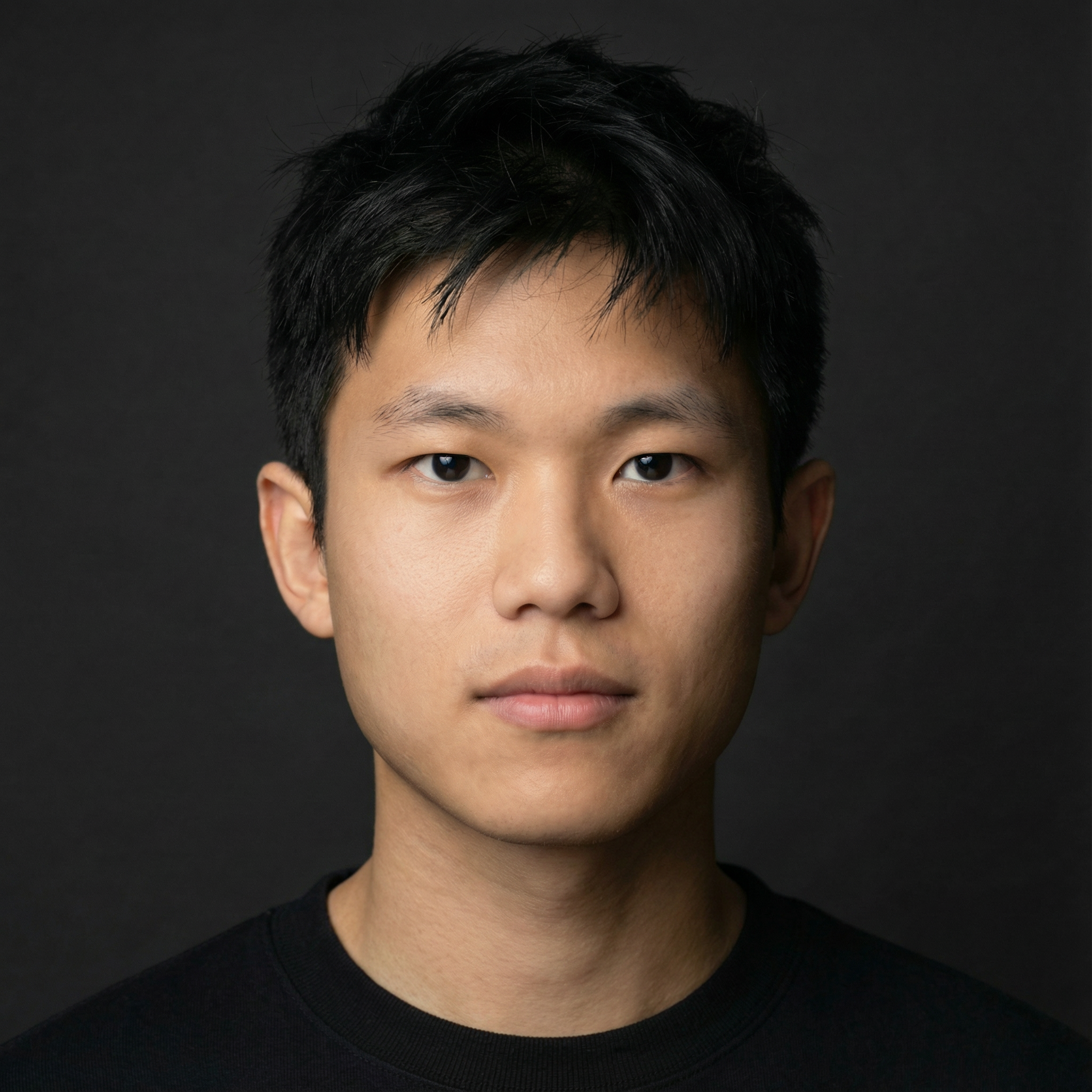

Jeremy Z Yang

Assistant Professor of

Business Administration

at Harvard Business School


failed projects

This is a note on projects that I put serious effort into but that didn’t work out. Making this process more transparent and sharing what we’ve learned from it is a public good for us as researchers. This is along the same lines as Melanie Stefan’s Nature article and Ben Olken’s epic failures, except that mine are not published in Nature or nearly as epic. I hope that I don’t have to update this page as frequently as my actual CV.

The Value of Connected Store

This was my first real research project. I started working on it in my first summer in the program. We worked with a startup that built a system that lets customers in a retail store use an in-store tablet to scan a product label and browse additional information about that product and related products. It’s like mixing the experience of physical retail stores with Amazon. We were interested in the treatment effect of this system on business outcomes such as the number of visitors, revenue, and the variety of items sold. We proposed a staggered design that randomizes the timing of the crossover from control to treatment across different stores of the same brand. I still think this is a pretty cool design, and if we had found an effect it would have helped the company in their next pitch. But the experiment never happened. While the startup was very supportive the whole time, the retailer wasn’t quite so. At first, our proposal got pushed down from a store-level study to a product-level study within one store, then to a single section within that store, and then the retailer stopped replying to our emails… I’m still not quite sure what happened on their end as I type this. I guess life happened. If I were to do this again, I’d attend every meeting, not just those between us and the company but also those between the company and the retailer. Perhaps some key messages got lost in translation.
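To make the staggered design above concrete, here is a minimal sketch in Python. It assumes a fixed number of discrete time periods and a list of store identifiers; the function names, the store labels, and the choice to balance stores evenly across switch dates are all illustrative assumptions, not details of the proposal we actually wrote.

```python
import random


def assign_crossover_periods(store_ids, n_periods, seed=0):
    """Randomly assign each store the period in which it switches to treatment.

    Hypothetical helper: stores are shuffled, then spread as evenly as
    possible over periods 1..n_periods-1, so every store has at least one
    control period (period 0) and is treated by the final period.
    """
    if n_periods < 2:
        raise ValueError("need at least one control and one treatment period")
    rng = random.Random(seed)
    shuffled = list(store_ids)
    rng.shuffle(shuffled)
    switch_periods = range(1, n_periods)
    return {
        store: switch_periods[i % len(switch_periods)]
        for i, store in enumerate(shuffled)
    }


def treatment_schedule(assignment, n_periods):
    """Map each store to a list of 0/1 treatment indicators, one per period."""
    return {
        store: [int(t >= start) for t in range(n_periods)]
        for store, start in assignment.items()
    }


# Illustrative example with six made-up stores and four periods.
assignment = assign_crossover_periods(["A", "B", "C", "D", "E", "F"], 4, seed=1)
schedule = treatment_schedule(assignment, 4)
for store in sorted(schedule):
    print(store, schedule[store])
```

Because the switch date is randomized, stores that have not yet crossed over serve as concurrent controls for those that have, which is what makes the design attractive when a retailer eventually wants the system in every store.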

Does Double Counting Explain Illusory Truth Effect (ITE)?

I got the idea while reading related papers in a seminar. The illusory truth effect is a very robust result: repeated exposure to a statement makes people think it’s more likely to be true, regardless of whether it actually is. The leading explanation is that people use familiarity as a proxy for truth, and repeated exposure increases familiarity, which in turn increases perceived truthfulness. But information might also play a role here. Suppose a receiver hears the same statement from two independent sources; then a Bayesian should rationally update her belief toward the statement being true. In standard ITE experiments, the repetition doesn’t provide any additional information, but maybe the subjects think it does. Maybe they forget that they have already seen the statement and are therefore double counting? To test this, I ran a very simple experiment on MTurk following the standard ITE manipulations with an additional condition: a reminder that tells people explicitly that they are seeing the same statements again. The prediction is that, if ITE is partially driven by double counting, then the reminder should reduce the size of the ITE compared to a control condition without the reminder. What did I find? Well, I replicated the ITE, but the reminder didn’t work. Subjects were just as likely to rate a statement as true after repeated exposure even when explicitly told that the statement was repeated.
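The double-counting argument above can be illustrated with a small Bayesian calculation. This is only a sketch: the prior of 0.5 and the likelihood ratio of 2 are made-up numbers chosen for illustration, not estimates from the experiment, and the function name is hypothetical.

```python
def posterior(prior, likelihood_ratio, n_signals):
    """Posterior probability that a statement is true after n_signals
    conditionally independent signals, each with the same likelihood ratio
    P(hear statement | true) / P(hear statement | false)."""
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * likelihood_ratio ** n_signals
    return posterior_odds / (1 + posterior_odds)


# Assumed illustrative values (not from the experiment).
prior = 0.5
likelihood_ratio = 2.0

# A correct Bayesian treats a verbatim repetition as a single signal.
correct = posterior(prior, likelihood_ratio, n_signals=1)

# A double counter treats the repetition as a second independent source.
double_counted = posterior(prior, likelihood_ratio, n_signals=2)

print(round(correct, 3))         # 0.667
print(round(double_counted, 3))  # 0.8
```

Under these assumptions, double counting alone raises the perceived probability of truth from about 0.67 to 0.8 without any role for familiarity. If that were the mechanism, a reminder that the statement is a repeat should pull beliefs back toward the single-signal posterior.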